Coded Distributed Computing

|

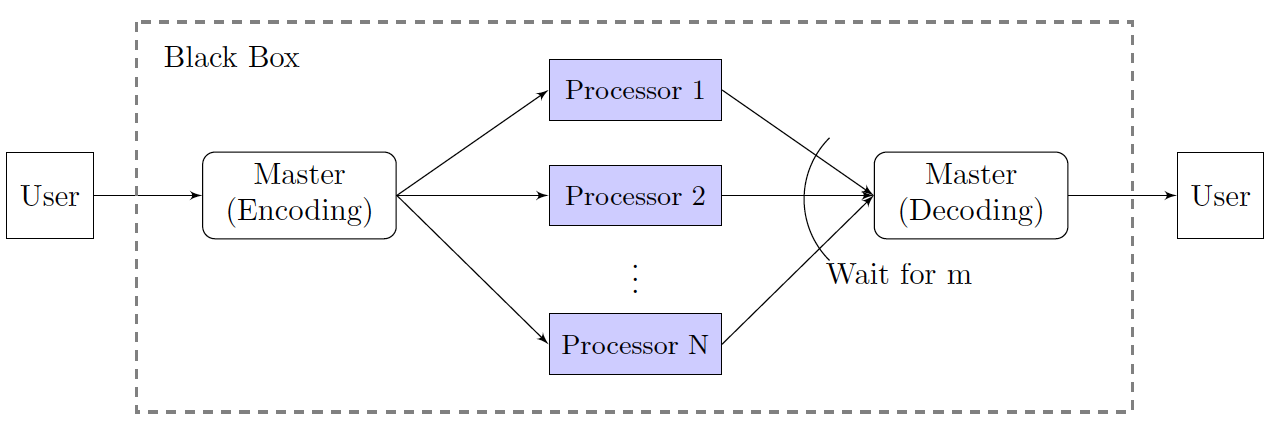

Distributed computing has become the basis for all large-scale computation. The ability to distribute work over many processors and utilize their combined computational power to run large tasks in parallel has become necessary with the increasing size of data sets and task complexity. One of the most serious issues facing distributed computing is that processors take randomly varying amounts of time to finish, which means that all too frequently, when a task is divided into N parts amongst N processors, several will straggle [Dean et. al.]. In these traditional schemes, one needs to wait for all processors to finish computing before the job is done, increasing latency. Most current research in this domain focuses on replicating the work of straggling processors by giving their job to processors that have already finished. However, these approaches increase communication and coordination cost, two bottlenecks in most systems [Kangwook et. al.]. We tackle the straggler problem arising in distributed computing by assigning redundant computations to nodes derived through error correcting codes.

Publications

Conference

K. Lee, M. Lam, R. Pedarsani, D. Papailiopoulos, and K. Ramchandran, “Speeding-up Distributed Machine Learning Using Codes”, Neural Information Processing Systems (NIPS): Workshop on Machine Learning Systems, Montreal, Canada, December, 2015.

K. Lee, M. Lam, R. Pedarsani, D. Papailiopoulos, and K. Ramchandran, “Speeding Up Distributed Machine Learning Using Codes”, IEEE International Symposium on Information Theory (ISIT), Barcelona, Spain, July, 2016.

Preprint

T. Baharav, K. Ramchandran, “Applying Coding Theory to Distributed Matrix Multiplication,” October 2017 (email the authors for a preprint)